Archive for the ‘Tangible User Interface’ Category

Frontline Gloves – prototype presented for TUI exhibition at CIID

This is the description of the Frontline gloves concept for firefighters as presented at the Tangible User Interface exhibition at CIID on 31st Jan 2009.

Frontline - Networked gloves for firefighters

What is it?

A pair of networked gloves that allow two firefighters to communicate with each other by using hand gestures in a firefighting situation.

Who is it for?

Firemen working in teams of two.

Why is it valuable?

Typically firemen need to operate as a tightly knit unit in a firefighting situation. Constant communication with one another and rapid assessment of the changing environment is key to their safety and effectiveness. The conditions can be extreme, with hazardous objects in their path, or with smoke so thick that visibility is too low to scope the size of the space they are operating in.

The Frontline Gloves enable firemen to quickly scope a zero-visibility space by means of direct visual feedback about obstacles and clearances. Further, the gloves allow them to send instructions to their teammate by means of simple hand gestures. This reduces the need for spoken communication, saving precious air that would otherwise be used up in talking, and avoids problems with radio such as cross-talk.

How does it work?

Each glove contains custom-made electronics and sensors that allow the two gloves to communicate via a wireless protocol. Ultra-bright LEDs built into the glove light up to indicate specific instructions.
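The post doesn't specify the message format or the instruction set. As a minimal sketch, assuming each instruction travels as a single byte over the wireless link and that each one lights a fixed pattern on four LEDs (the instruction names, byte values and patterns are all hypothetical), the sending and receiving logic could look like this:

```python
from enum import IntEnum
from typing import Optional, Tuple


class Instruction(IntEnum):
    """Hypothetical instruction set; the prototype's real set is not documented."""
    STOP = 0x01
    ADVANCE = 0x02
    RETREAT = 0x03
    COME_TO_ME = 0x04


# Hypothetical LED patterns for four LEDs on the glove: 1 = on, 0 = off.
LED_PATTERNS = {
    Instruction.STOP: (1, 1, 1, 1),
    Instruction.ADVANCE: (0, 0, 1, 1),
    Instruction.RETREAT: (1, 1, 0, 0),
    Instruction.COME_TO_ME: (1, 0, 0, 1),
}
ALL_OFF = (0, 0, 0, 0)


def encode(instruction: Instruction) -> bytes:
    """Pack an instruction into a one-byte payload for the wireless link."""
    return bytes([instruction.value])


def decode(payload: bytes) -> Optional[Instruction]:
    """Turn a received payload back into an instruction, or None if invalid."""
    if len(payload) != 1:
        return None
    try:
        return Instruction(payload[0])
    except ValueError:
        return None  # unknown byte, e.g. corrupted in transit


def leds_for(payload: bytes) -> Tuple[int, ...]:
    """LED states the receiving glove should show; all off if the payload is invalid."""
    instruction = decode(payload)
    if instruction is None:
        return ALL_OFF
    return LED_PATTERNS[instruction]
```

Treating anything unrecognized as "all LEDs off" is one conservative choice: a corrupted packet then shows nothing rather than a wrong instruction.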

What were the key learnings?

– Tangible User Interfaces have vast potential to address challenges faced by small teams of rescue workers, such as firemen, scuba divers, etc. Screen-based interfaces demand a high degree of attention to operate, which is often difficult in the conditions these users find themselves in. A powerful answer is to replace screen-driven interaction with natural, gestural interaction.

– Designing solutions for niche user contexts like this one demands thorough user research and prototype iteration, and these need to be intrinsic to the design process adopted in the project. We interviewed researchers in the field of wearable computing for firefighting at the Fraunhofer Institute in Germany. This helped a good deal in validating and refining our core assumptions about both the context of use and the design of the product.

Team Members

Ashwin Rajan and Kevin Cannon

Frontline gloves – Tech Testing

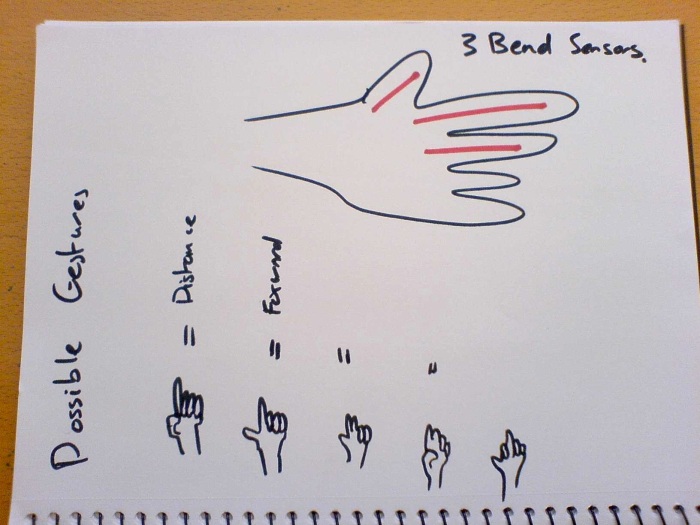

Two technological components were critical for demonstrating the value of the Frontline gloves concept: 1. the proximity sensor for sensing distances in smoky, dark, low-visibility environments, and 2. basic communication between firefighters through gesture recognition, using bend sensing technology. Putting together quick and dirty prototypes of these two components helped us test their viability early. Here are a couple of videos of the tests.

Testing Bend Sensors

Testing Proximity Sensors (Ultrasound)
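An ultrasonic proximity sensor measures the round-trip time of a sound pulse, so the one-way distance is half of speed × time. Here is a minimal sketch of that calculation. The temperature correction uses the common dry-air approximation v ≈ 331.3 + 0.606·T m/s. The 3 m range and the four-LED display are hypothetical choices, not details of our prototype. Because sound travels faster in hot air, a sensor that assumes room temperature would under-read distances in a fire.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air, in m/s, at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance(echo_us: float, temp_c: float = 20.0) -> float:
    """Convert a round-trip echo time in microseconds to a one-way distance in metres."""
    return speed_of_sound(temp_c) * (echo_us / 1_000_000) / 2


def proximity_level(distance_m: float, max_range_m: float = 3.0, levels: int = 4) -> int:
    """Number of LEDs to light (0..levels); more LEDs means a closer obstacle.

    The range and LED count are hypothetical display choices.
    """
    if distance_m >= max_range_m:
        return 0
    closeness = 1 - distance_m / max_range_m  # 1 at contact, near 0 at max range
    return min(levels, int(closeness * levels) + 1)
```

For example, a 5,831 µs echo at 20 °C works out to about 1.0 m.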

Frontline gloves – an attempt at miniaturization

In our initial ideas, the Frontline gloves product concept consisted of two distinct parts connected by wires: 1. the upper hand area of the glove, with proximity sensing and signalling capabilities, and 2. the brain and software, nestled in a pouch further up the arm (perhaps integrated into the end of the long arm of the glove). The latter would essentially consist of an Arduino, an XBee shield for wireless communication with the paired glove, and a battery pack powering the whole setup.

But before long, encouraged by our teacher Vinay.V, we found ourselves exploring ways to miniaturize the product by combining both parts into one, housed within the box on the hand of the glove itself (photo above). This, we learnt, would be possible if we got rid of the Arduino board by detaching its chip, clock and a few other components and mounting them onto a much smaller custom-made board.

The integrated single-piece set up of components.

The Tradeoff

In the end, we decided to use the complete XBee shield setup as is, including its board, for this prototype. As a result, we traded two separate components connected by wires for a single, larger component fully integrated into the hand of the glove, battery pack included.

The increasing size of the housing for components as prototyping progressed.

Testing how the box would fit and feel on the glove.

How the setup would fit together.

Frontline gloves – concepts and prototypes

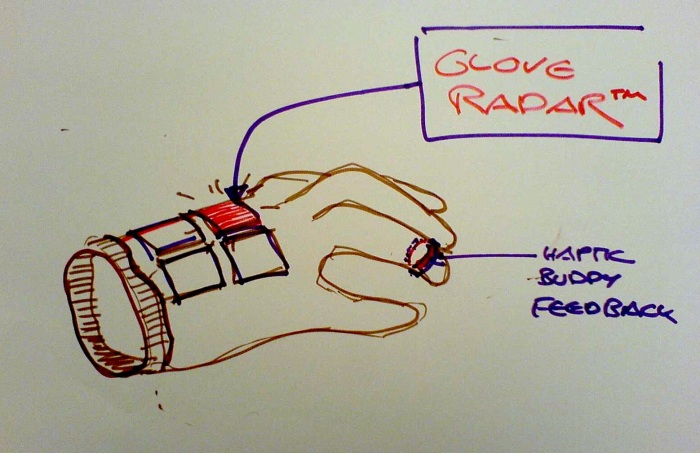

I posted a short note on our recent project in Tangible User Interfaces where we decided to work on wearables for firefighters. Here are some photos of initial sketches and prototypes. More about the actual features of the glove in posts ahead.

Rapidly created scenarios helped us better understand how technology-enhanced gloves could answer critical needs of firefighters in real fire situations.

Because we were working with a set of four or five critical user needs (finalized from researching papers and ongoing projects in wearables for firefighting), the first concept of our product became loaded with features – a classic case of ‘featuritis’.

An all-inclusive first version of the glove.

Exploring possibilities and uses of gesture recognition in the gloves.

Constant visual and verbal feedback from teachers helped us iterate on issues of form, function, interaction, physical limitations and user interface.

From feedback sessions with teachers: drawing by Alex Wiethoff.

From feedback sessions with teachers: drawing by Christopher Scales.

Bend sensors came up as a great option for adding gestural recognition possibilities in a prototype.

Soon we made the leap to testing and working with an actual prototypical glove.

Experimenting with a real glove helped explore issues of viability of gestures, user interface details, etc.
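Bend (flex) sensors change resistance as they bend, so each finger can be read as an analog value and compared against a threshold. This sketch makes several assumptions. Readings are 10-bit (0 to 1023, as from a typical Arduino analog input). The threshold of 600 and the three-finger gesture vocabulary are hypothetical. A gesture must also be held for several consecutive samples before it counts, so that gripping a hose or tool is less likely to send a message by accident.

```python
from typing import Dict, Optional, Sequence, Tuple

BEND_THRESHOLD = 600  # hypothetical; depends on the sensor and its resistor divider

# Hypothetical gestures, keyed by which fingers (index, middle, ring) are bent.
GESTURES: Dict[Tuple[bool, ...], str] = {
    (True, True, True): "stop",
    (False, True, True): "go that way",
    (False, False, True): "come to me",
}


def classify(readings: Sequence[int], threshold: int = BEND_THRESHOLD) -> Optional[str]:
    """Map raw bend readings to a gesture name, or None if unrecognized."""
    bent = tuple(r > threshold for r in readings)
    return GESTURES.get(bent)


class GestureDebouncer:
    """Report a gesture once, after it has been held for `hold` samples in a row."""

    def __init__(self, hold: int = 5) -> None:
        self.hold = hold
        self._last: Optional[str] = None
        self._count = 0

    def update(self, readings: Sequence[int]) -> Optional[str]:
        gesture = classify(readings)
        if gesture == self._last:
            self._count += 1
        else:
            self._last, self._count = gesture, 1
        # Fires exactly once per held gesture; unrecognized input (None) never fires.
        return gesture if self._count == self.hold else None
```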

Toy View Workshop – Computer Vision Project 1

Continuing from describing the intent of the fascinating Toy View workshop in my last post, here are some images from the first experimental project we built – a collaborative dance game called Face-Off. The game was developed using motion tracking in Adobe Flash. It is played by two dancers who are prompted to dance or move based on visual feedback (while dance music plays in the background). Only the dancer who is prompted visually must move, while the other must stay absolutely still. The team of two dancers wins if they are each able to dance at the right time and keep the music going until the end of the song.

Initial sketches of the Face-Off game

Simple storyboards to work out the specifics of gameplay.

Working out details of motion tracking on the webcam.

Screenshot of the game, which is projected onto a vertical surface such as a wall. A green box appears around the player whose turn it is to move.
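The post doesn't say exactly how the motion tracking worked in Flash. One common technique is frame differencing: compare each webcam frame with the previous one and count the pixels that changed noticeably. Here is a minimal sketch of that technique applied to the Face-Off rule. It assumes grayscale frames, player 0 in the left half of the image and player 1 in the right, and hypothetical thresholds.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixel rows, values 0-255


def motion_in_region(prev: Frame, curr: Frame, x0: int, x1: int,
                     pixel_threshold: int = 30) -> float:
    """Fraction of pixels in columns [x0, x1) that changed by more than the threshold."""
    changed = total = 0
    for prev_row, curr_row in zip(prev, curr):
        for x in range(x0, x1):
            total += 1
            if abs(curr_row[x] - prev_row[x]) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def check_turn(prev: Frame, curr: Frame, active_player: int,
               motion_threshold: float = 0.05) -> bool:
    """True if the prompted player moves while the other player stays still.

    Assumes player 0 stands in the left half of the frame and player 1 in the right.
    """
    width = len(curr[0])
    halves = [(0, width // 2), (width // 2, width)]
    moving = [motion_in_region(prev, curr, a, b) > motion_threshold for a, b in halves]
    return moving[active_player] and not moving[1 - active_player]
```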

Toy View Workshop – Computer Vision Project 2

In my last post I described the intent of the Toy View workshop held at CIID in December ’08, taught by Yaniv Steiner. Here are some of the projects we developed in the course of the workshop.

Reactable Game

Here is a video on the fascinating open-source Reactable technology – a collaborative music instrument with a multi-touch tangible surface-based interface – developed in Barcelona, Spain.

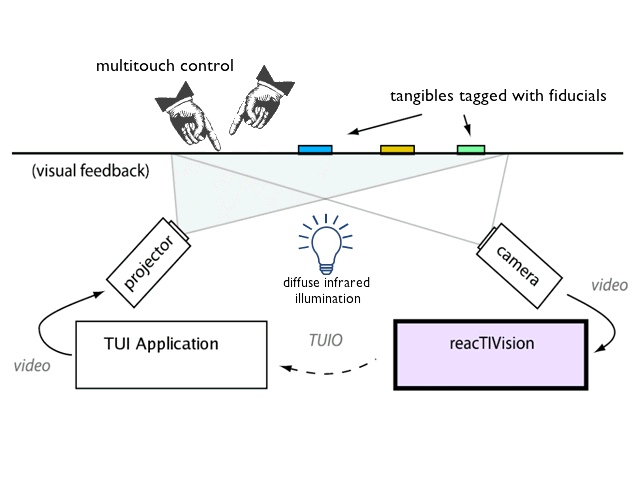

reacTIVision is an open-source, cross-platform computer vision framework for fast and robust tracking of fiducial markers attached to physical objects, as well as for multi-touch finger tracking. The reacTIVision engine and sample code, as well as the fiducials (the markers attached to physical objects for tracking), can all be downloaded for free here.

How the reacTIVision technology works (courtesy http://mtg.upf.es/reactable/?software)

Annotated markers called 'fiducials' can be pasted onto physical objects to facilitate tracking of object location and movement. (Courtesy http://mtg.upf.es/reactable/?software)

We built our own reacTIVision setup to develop a simple game during this short workshop.

Experimenting with the reactable set-up.

We developed a simple game using images of CIID students and faculty, including Yaniv. The game mixed the heads, torsos and legs of different people depending on how the fiducials were placed and turned. Lots of fun and laughs all around!

Screenshot of reactable game that mixes body parts from different pictures.
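reacTIVision reports each fiducial's marker ID, position and rotation angle, sending them via the TUIO protocol. This sketch assumes those values have already been received and that angles are in radians. The marker-to-body-part assignment and the placeholder photo names are hypothetical, and this is not how our game was actually coded. The idea is that turning a marker cycles through different people's photos for that section of the body.

```python
import math
from typing import Dict, List, NamedTuple


class Fiducial(NamedTuple):
    """One tracked marker: ID, normalized position (0..1) and rotation in radians."""
    marker_id: int
    x: float
    y: float
    angle: float


# Hypothetical assignment of marker IDs to body sections.
SECTIONS = {0: "head", 1: "torso", 2: "legs"}
# Placeholder photo names standing in for pictures of students and faculty.
PHOTOS = ["photo_0", "photo_1", "photo_2", "photo_3"]


def photo_for_angle(angle: float, photos: List[str] = PHOTOS) -> str:
    """Divide a full turn into equal sectors, one per photo."""
    sector = (angle % (2 * math.pi)) / (2 * math.pi) * len(photos)
    return photos[int(sector) % len(photos)]  # modulo guards against rounding to len


def compose(fiducials: List[Fiducial]) -> Dict[str, str]:
    """Choose which photo to show for each body section; unknown markers are ignored."""
    return {SECTIONS[f.marker_id]: photo_for_angle(f.angle)
            for f in fiducials if f.marker_id in SECTIONS}
```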

Toy View Workshop – Intent

Often the intent of an experimental workshop can have everything to do with what its participants learn, and with the depth and innovation of its outcomes. One such was the ‘Toy View’ workshop, which we at CIID attended in late December ’08 with Yaniv Steiner of Nasty Pixel. It was an exercise in contemplating, iterating and building concepts for toys and games. I thought the intent of the workshop was very interesting and decided to reproduce it here:

Toy View – Description of the workshop

For thousands of years mankind kept on crafting toys, with very little change in shape or form. Can a new generation of toys emerge, combining both the physical aspect and the diversity of the digital world?

This workshop will provide students with both practical and theoretical knowledge in the field of computer vision, in relation to: Play, Games and Toys. The expected results are toys or games in which a person uses a physical object to interact with a digital environment.

Students will attempt to create innovative “magical” toys, that are physical – mostly appearing as physical objects or artifacts made from different natural and synthetic materials – and at the same time serve as controllers and actuators for functions dealing with digital data. Digital data can be a wide set of elements, starting from pure text and ending in audio, videos, images, and at times even social particles. The emphasis is on creating a new hybrid of physical computer games.

Illustration made during brainstorm about what makes toys playful and how interactivity plays a role in playfulness.

Structure

At first, students will learn to harness and manipulate different computer-vision tools using a camera, which provides machines with the ability to ‘see’. This part of the workshop will focus on building artificial systems that obtain information from images in order to understand their surrounding environment. The camera in this case is correlated with the human eye. However, the human organ that actually decodes this information is the brain and not the eye, interpreting images as what humans grasp as ‘vision’. This first step will explore ways and techniques to craft such machine vision.

The second part of the workshop will drive students into the world of Games and Play. By investigating classical computer games, physical games and toys, students will brainstorm the topic, experimenting with original ideas that combine both physical ‘toy’ objects and the digital world. Conceptually speaking, this is the point at which new interactions and games will emerge.

The third and final part of the workshop will focus on realizing and crafting the above concepts. By the end of the workshop, students will have a physical working prototype of their idea in the form of a new game, which will be presented at the end of the course to fellow students and colleagues.

The whole workshop description including technical and conceptual aspects is here.

Designing from the frontlines

We are currently exploring wearable computing solutions for firefighters in the line of duty. Navigation and orientation in extremely difficult environments (often on fire) with very low visibility is a huge challenge faced by firefighters every day, and a key design challenge. There is a lot of interesting work happening in this area.

We’re still at the desktop and field research stage, and today while browsing the net I found a great story: that of a firefighter who turned designer after being trapped himself in what turned out to be the perfect research situation. The page also has a good example of a very simple but effective video prototype (they could have shown more details of the actual device to make it even better, IMO).

The Flexibility-Usability Tradeoff: an Illustration

I recently came upon a delightful little product on my flatmates’ shelf that illustrates the ‘Flexibility-Usability Tradeoff’ principle very well. The Bosch IXO is a great example showing that when you choose flexibility, you risk usability.

In this context, what is meant by usability may be far more apparent than what ‘flexibility’ means. One way I understand flexibility (especially in product design) is as the range of distinct or largely unrelated features on a product. My Timex Ironman Triathlon digital wristwatch is a highly flexible device in terms of ‘operable’ features: it shows the time, can be used as a chronograph (lap/split), and has a 30-lap memory recall, Indiglo, a countdown timer, alarms, two time zones, built-in setting reminders, and more. It has one screen and a total of five buttons labeled ‘Mode’, ‘Start/Recall’, ‘Indiglo’, ‘Stop/Reset’ and ‘Start Split’. Learning to use all of these effortlessly, as you can guess, requires a detailed manual and considerable practice.

The TIMEX Ironman Triathlon 30 Lap - requires the patience only a professional can spare.

The Bosch IXO, on the other hand, does one thing and one thing only, and boy does it do it well! It’s a cordless electric screwdriver, period. The few controls it does provide broaden the feature set, but they represent a very different kind of flexibility from the Timex’s: they are only features that enhance the core function of the product (see captions of images below).

Purpose meets elegance. The set of screwdriver bits stacks on the dock, which also serves as the device’s charger.

A simple two-way pin that toggles from side to side sets the direction of rotation, forward or backward (screw or unscrew). The big red button runs the device.

A set of three green LEDs (no other color states) are grouped on the top face of the device. They provide feedback when the machine is running (forward/back represented by arrows) or when the machine is charging (middle).

And finally, a light shines on the work area while the machine is running!

That’s really all there is to it. So simple to comprehend, such a delight to use.

User Interface at the Grocery Store

Language is a problem in Denmark: though most Danes you meet speak great, communicative English, they don’t bother too much with English signs in the built environment. Here’s one example of how this can be a nuisance: a weighing machine at the local IRMA grocery store. Since I don’t speak Danish, Danish text is kind of invisible to me; that is, I don’t really notice things written in Danish. Hence this machine was quite invisible to me when I had to look for it. I must have looked around quite a bit before I spotted something that was right in front of me.

It was surprisingly hard to spot the weighing machine amongst the stuff on display.

But finding it didn’t really solve my problem. They’ve apparently given the interface some thought, using pictures to depict the items you can weigh. But some pictures had been removed (or had simply fallen off?), and those had just been replaced with words in Danish. The two items I chose from the shelves had no pictures, so I spent another few minutes playing an interesting game, going back and forth between the shelves where the food was stocked and the machine, trying to match names. And not all the items on the shelves had names!